Generating Point Clouds

If we do want robots to learn about the world, we can use computer vision. We can employ traditional methods. Build up a full-fledged model from corner detectors, edge detectors, feature descriptors, gradient descriptors, etc. We can also use modern deep learning techniques. One large neural network hopefully captures similarly or even better abstractions compared to the conventional computer vision pipeline.

Computer vision is not the only choice though! In recent years there is a proliferation of a different type of data: depth data. Collections (or clouds) of points represent 3D shapes. In a game setting the Kinect was a world-shocking invention using structured light. In robotics and autonomous cars LIDARs are used. There is huge debate about which sensors are gonna “win”, but I personally doubt there will be a clearcut winner. My line of reasoning:

- Humans use vision and have perfected this in many ways. It would be silly to not use cameras.

- Depth sensors can provide information when vision gets obstructed.

- Humans use glasses, microscopes, infrared goggles, all to enhance our senses. We are basically cyborgs.

- Robots will benefit from a rich sensory experience just like we do. They want to be cyborgs too.

Point clouds

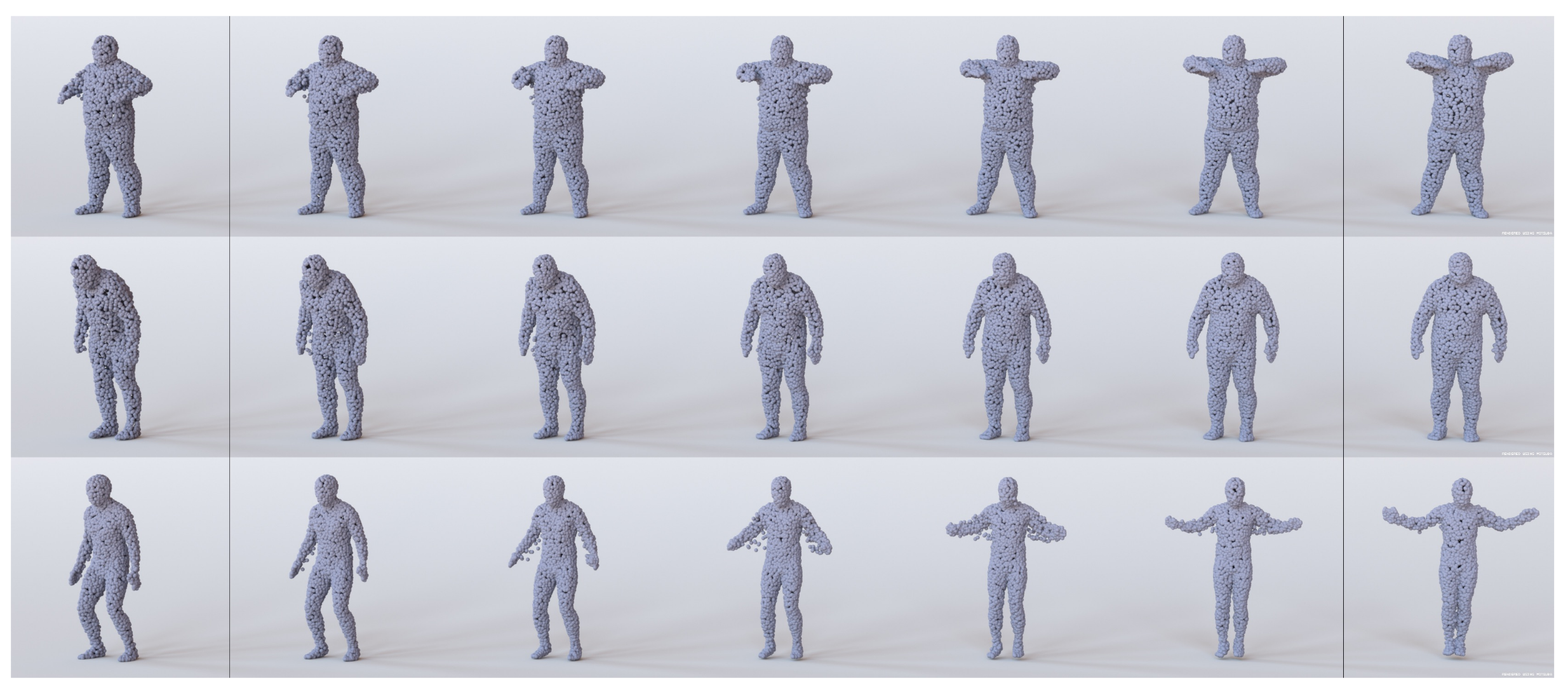

Point clouds are quite a good description. Objects are represented by individual points in a 3D space. By making the points a bit bigger you can easily figure out the shapes yourself (see the figure below).

A group of researchers who started to study point clouds are Panos Achlioptas, Olga Diamanti, Ioannis Mitliagkas, and Leonidas Guibas in the paper Learning Representations and Generative Models for 3D Point Clouds from Leonidas Guibas’ Geometric Computation group in the Computer Science Department of Stanford University. Side note: prof. Guibas obtained his PhD under the supervision of Donald Knuth. You can reach Panos via his website. I haven’t found his Twitter account.

There are two main reasons why point clouds should be treated different from pixels in a neural network:

- The convolution operator works on a grid. Encoding 3D data on a grid would encode a lot of empty voxels. This means that for point clouds we cannot just convolution.

- Point clouds are permutation invariant. Only the 3D position of a point matters, their id does not. Points in a point cloud can be numbered in any way. It still describes the same object. A comparison operator between two point clouds need to take this into account. Preferably the latent variables will also be permutation invariant.

Permutation invariant distance metrics

There are permutation invariant distance metrics available. The authors describe the Earth Mover’s Distance (EM). This is a concept discovered by Gaspard Monge in 1781 on how to transport soil from one place to the next with minimal effort. Let us define the flow \(f_{i,j}\) between location \(i\) and \(j\) with distance \(d_{i,j}\), then the EM distance between location \(i \in P\) and \(j \in Q\) is as follows:

\[d_{EM}(P,Q) = \frac{ \sum_{i=1}^m \sum_{j=1}^n f_{i,j} d_{i,j} }{ \sum_{i=1}^m \sum_{j=1}^n f_{i,j} }\]The individual flow is multiplied with the corresponding distance. The overall sum is normalized with the overall flow. In mathematics this is known as the Wasserstein metric. This blog post introduces the Wasserstein metric perfectly and this blog post explains its relevance to Generative Adversarial Networks.

The Wasserstein metric is differentiable almost everywhere. Almost everywhere (a.e.) is a technical term related to a set having measure zero. It states that the elements for which the property (in this case being differentiable) is not valid has measure zero. Another example of a function that is differentiable a.e. is a monotonic function on \([a,b] \rightarrow \mathbb{R}\).

The Chamfer pseudo-distance (C) measure is another measure which calculates the squared distance between each point in one set to the nearest neighbour in the other set:

\[d_{C}(P,Q) = \sum_{i=1}^m \min_j d_{i,j}^2 + \sum_{j=1}^n \min_i d_{i,j}^2\]It’s stated that \(d_{C}\) is more efficient to compute than \(d_{EM}\).

Immediately we can observe from these metrics that they are not invariant with respect to rotations, translations, or scaling.

Comparison metrics

To compare shapes in a 3D space we can follow different strategies. We describe three methods that take the spatial nature into account and that compare over sets of 3D objects (so we can compare set \(P\) with set \(Q\)):

- Jensen-Shannon divergence.

- Coverage

- Minimum Matching distance.

Jensen-Shannon

First of all, we can align the point cloud data along the axis, introduce voxels and measure the number of points in each corresponding voxel. Then we use a distance metric using these quantities, in this case a Jensen-Shannon divergence.

\[d_{JS}(P||Q) = \frac{1}{2} D_{KL}(P||M) + \frac{1}{2} D_{KL}(Q||M)\]Here \(M = \frac{1}{2}(P + Q)\) and \(D_{KL}\) is the Kullbach-Leibler divergence metric.

To compare sets of point clouds we can do exactly the same. In this case the number of points in each voxel is just the collection of points across all point clouds in that set.

Coverage

Coverage is defined by the fraction of point clouds in \(P\) that are matched with point clouds in \(Q\). The match is defined by one the permutation invariant distance metrics. It’s not entirely clear how this is completely specified to me. Is there a threshold used that defines if it is a match? Or is it just a sum or average?

Minimum Matching distance

The minimum matching distance measures also the fidelity of \(P\) versus \(Q\) (compared to the coverage metric). This indeed uses an average over the (permutation invariant) distances between point clouds.

Generation

The pipeline followed by the authors is similar to that of PointNet. The pointcloud contains 2048 3D points. This data is fed into the encoder. The encoder exists of 1D convolutional layers (five of them) with a kernel size of 1 each of which are followed by a layer with ReLUs and one to perform batch normalization. After this pipeline an permutation-invariant max layer is placed. To read more on a 1D convolutional layer check the following clip by Andrew Ng. On just a 2D matrix a 1D convolutional layer would be just a multiplication by a factor. However, on 3D objects, it can be used to reduce layers or introduce a nonlinearity.

The decoder contains three layers, the first two followed by ReLUs.

Results

Some of the results from this work:

- The GAN operating on the raw data converges much slower than the GAN operating on the the latent variables.

- The latent GAN model using the AE with Earth Mover’s distance outperforms the one with the Chamfer pseudo distance.

- Both latent GAN models suffer from mode collapse. Wasserstein GAN does not…

Note that the Wasserstein metric is the same as the Earth Mover’s distance. So the above basically states that using Wasserstein distance makes sense for both the autoencoder as well as the GAN involved.

There are not yet many models that operate directly on point clouds. PointNet is one of the most famous ones. In a ModelNet40 shape classification task it has the following performance:

- 87.2% - Vanilla PointNet, without transformation networks

- 89.2% - PointNet, with transformation networks

- 90.7% - PointNet++, with multi-resolution grouping to cope with non-uniform sampling densities

- 91.9% - PointNet++, with face normals as additional point features

And the paper’s performance:

- 84.0% - Earth Mover’s distance

- 84.5% - Chamfer distance

It is not clear to me why they don’t list the results of the PointNet and PointNet++ papers which they both cite. They should have definitely told why it cannot be compared if they think that’s the case.

Promising research direction

One of the most obvious improvements seem to be the choice of the Wasserstein metric in different parts of the architecture. Another paper that caught my interest is Large Scale GAN Training for High Fidelity Natural Image Synthesis (pdf) under review at ICLR 2019.

An interesting aspect of this paper is that they sample from a different distribution while testing versus while training. They introduce a “truncation trick”. It samples from a truncated Normal distribution rather than \(N(0,\sigma)\) for the latent variables. (The values above a particular threshold are just sampled again until they are below that threshold.) I don’t completely get this. What’s going on there? Is there a mode in the network that defines the prototypical dog and are other dogs defined by nonzero values in the latent variable space? Then this seem to show that the layer exhibits an non-intuitive decomposition of the task at hand. I would expect a zero vector to correspond to an “abstract dog” and have all nonzero parameters contribute in an attribute like fashion. This seems to be more prototype-like, similar to old-fashioned vector quantization.

They however also define other latent random variables (in appendix E). The censored normal \(\max[N(0,\sigma),0]\) is interesting. It reminds me of nonnegative matrix factorization. By using a nonnegative constraint the representation becomes additive (part-based) and sparse. That’s quite different from prototype-like methods.

In the last few months I’ve been trying nonparametric extensions to the latent layer, but these experiments do not seem to be very promising.

A promising research direction might be to study autoencoders where the latent variables are such that they exhibit the same nonnegative (part-based representation) features. When we have a latent layer that decomposes the input like this, it might become more valuable to subsequently have a nonparametric extension.